AutoGAN

An Automated Human-out-of-the-Loop Approach for Training Generative Adversarial Networks

Problem Definition

Training a GAN is more complex than training a standard learning algorithm. Rather than optimizing a single objective function, GAN training is a competitive game: in the standard formulation, one player (the discriminator) tries to maximize an objective function while the other player (the generator) tries to minimize it. The challenge is then to find a Nash equilibrium, which is the solution concept for this game. Deciding when to stop training is also difficult, as there is no universally accepted criterion. Researchers often rely on visual inspection or on quantitative metrics such as Inception Score (IS) and Fréchet Inception Distance (FID). Both have limitations: visual inspection is subjective, and the quantitative metrics depend on pre-trained models. This project addresses these challenges with an automatic, systematic way to determine when to stop GAN training across different data types. The code for this project is publicly available on my GitHub.
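
For reference, the competitive game described above is usually written as the minimax problem from the original GAN paper (Goodfellow et al., 2014). Under that paper's sign convention, the discriminator $D$ maximizes the value function $V$ and the generator $G$ minimizes it; $p_{\text{data}}$ is the data distribution and $p_z$ is the noise prior:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}[\log D(x)] + \mathbb{E}_{z \sim p_z}[\log(1 - D(G(z)))]
$$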

The Solution

We introduce an algorithm called AutoGAN, which aims to automate the training of GANs without the need for human intervention. Traditional GAN training can be challenging, requiring human involvement and subjective visual inspection. Moreover, most evaluation metrics are designed for image datasets, limiting the application of GANs to non-image data. AutoGAN addresses these issues by utilizing an oracle, a customizable scoring mechanism that assesses the quality of generated samples.

An oracle is a function that assesses a given generator and assigns a score that reflects the quality of the generated samples. The definition of a “better” sample depends on the specific oracle instance chosen by the end-user. For instance, in some cases, a “better” sample might closely match the dataset’s distribution. However, in other scenarios, it could indicate increased diversity in the data or, in the case of images, higher quality and sharpness.
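
To make the idea concrete, here is a minimal Python sketch of what an oracle could look like for one-dimensional tabular data. It is a toy illustration, not an oracle instance from the paper: the names `Generator`, `Oracle`, and `make_moment_oracle` are hypothetical, and "better" is assumed here to mean "closer to the real data's mean and standard deviation".

```python
import random
import statistics
from typing import Callable, List

# Hypothetical types for illustration; not taken from the AutoGAN code base.
Generator = Callable[[int], List[float]]  # n -> list of n generated samples
Oracle = Callable[[Generator], float]     # generator -> score (higher is better)


def make_moment_oracle(real_data: List[float], n_samples: int = 1000) -> Oracle:
    """Build a toy oracle that rewards generators whose samples match the
    mean and standard deviation of the real data."""
    real_mean = statistics.fmean(real_data)
    real_std = statistics.pstdev(real_data)

    def oracle(generator: Generator) -> float:
        fake = generator(n_samples)
        gap = (abs(statistics.fmean(fake) - real_mean)
               + abs(statistics.pstdev(fake) - real_std))
        return -gap  # negate so that a smaller gap gives a higher score

    return oracle


rng = random.Random(0)
real = [rng.gauss(5.0, 2.0) for _ in range(2000)]
oracle = make_moment_oracle(real)

# Two stand-in "generators": one close to the real distribution, one far from it.
good_generator = lambda n: [rng.gauss(5.0, 2.0) for _ in range(n)]
bad_generator = lambda n: [rng.gauss(0.0, 1.0) for _ in range(n)]

good_score = oracle(good_generator)
bad_score = oracle(bad_generator)
print(good_score > bad_score)
```

Swapping in a different notion of "better" (diversity, image sharpness, downstream classifier accuracy) only requires a different function with the same signature.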

The algorithm iteratively trains the GAN and evaluates its performance based on an oracle’s scores. AutoGAN allows for temporary performance dips, giving the GAN the opportunity to recover and reach optimal results. Once no further improvement is observed for a specified number of iterations, AutoGAN returns the best-trained GAN model. This algorithm minimizes human intervention and is applicable to various data types, including tabular and image data. Extensive experiments demonstrate the superiority of AutoGAN over GANs trained with manual visual inspection.
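
The loop described above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the published algorithm: `auto_train_sketch`, `train_step`, `patience`, and `max_rounds` are hypothetical names, the oracle is assumed to return higher scores for better models, and the "model" in the usage example is just an integer step counter.

```python
from typing import Callable, List, Tuple, TypeVar

Model = TypeVar("Model")


def auto_train_sketch(
    model: Model,
    train_step: Callable[[Model], Model],  # trains for a while, returns the updated model
    oracle: Callable[[Model], float],      # higher score means better (assumption)
    patience: int,                         # allowed consecutive rounds without improvement
    max_rounds: int = 10_000,
) -> Tuple[Model, float]:
    """Train until the oracle score has not improved for `patience` rounds,
    then return the best model seen and its score."""
    best_model, best_score = model, oracle(model)
    rounds_without_improvement = 0
    for _ in range(max_rounds):
        model = train_step(model)
        score = oracle(model)
        if score > best_score:
            # With a real framework the best model would need to be copied or
            # checkpointed here, since training usually mutates it in place.
            best_model, best_score = model, score
            rounds_without_improvement = 0
        else:
            rounds_without_improvement += 1
            if rounds_without_improvement >= patience:
                break
    return best_model, best_score


# Toy run: scores dip after step 2 and recover at step 5.
scores: List[float] = [0.0, 1.0, 2.0, 1.0, 1.5, 3.0, 2.0, 2.0, 2.0, 2.0]
step = lambda m: m + 1
toy_oracle = lambda m: scores[min(m, len(scores) - 1)]

best_model, best_score = auto_train_sketch(0, step, toy_oracle, patience=3)
impatient_model, impatient_score = auto_train_sketch(0, step, toy_oracle, patience=2)
print(best_model, best_score)            # 5 3.0
print(impatient_model, impatient_score)  # 2 2.0
```

In the toy run, a patience of 3 lets training ride out the dip and reach the later peak, while a patience of 2 stops early and returns the earlier, weaker model.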

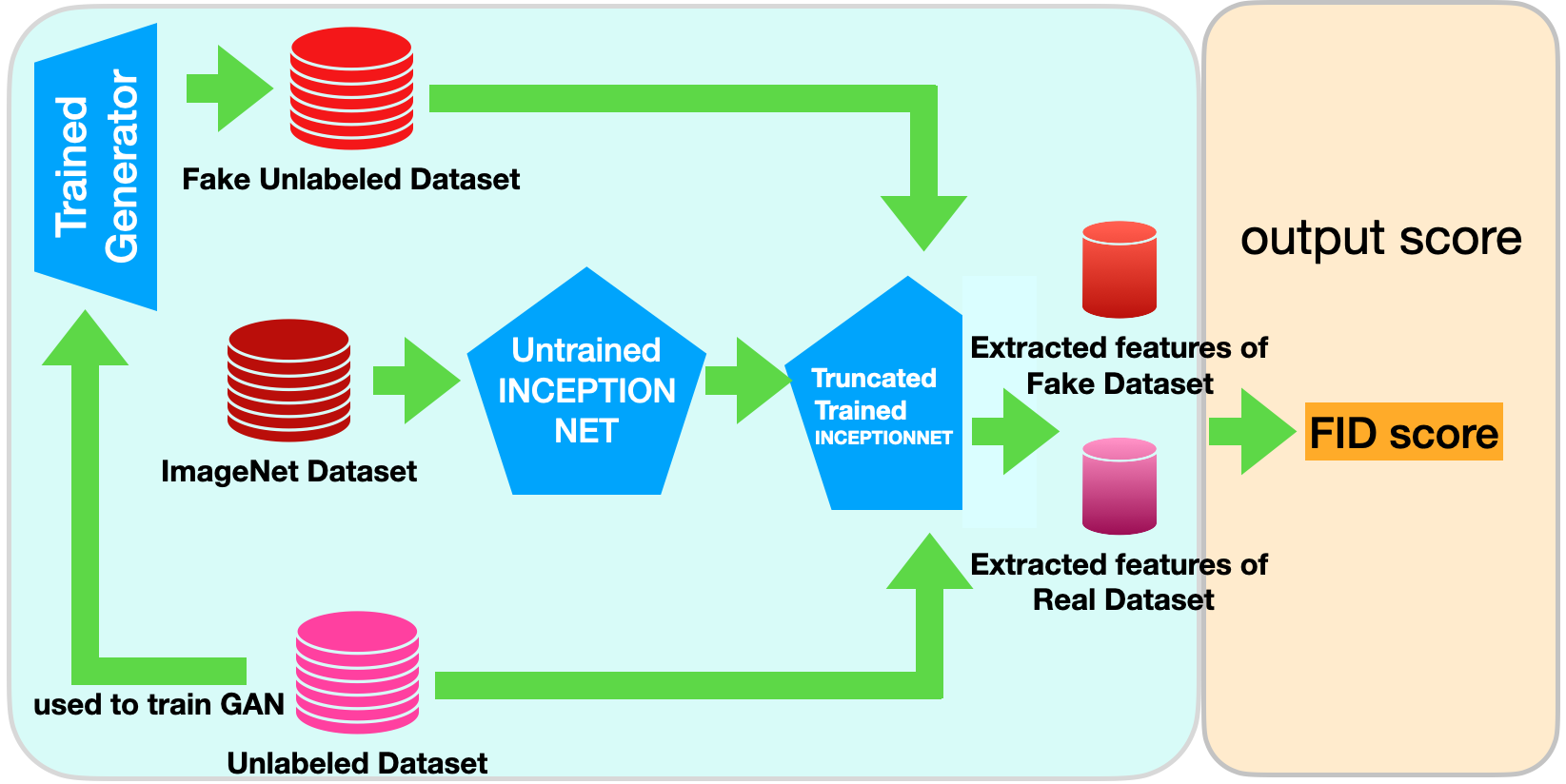

An Oracle Instance

The FID oracle instance uses a truncated version of the InceptionNet model that has been pre-trained on the ImageNet dataset. A GAN is then trained on the target real dataset, and its generator produces a fixed number of fake samples. Both the real and the fake data are passed through the truncated InceptionNet model to obtain their feature representations, from which the Fréchet Inception Distance (FID) score is computed. A lower FID score indicates higher-quality outputs from the GAN.
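
For reference, FID is commonly defined as the Fréchet distance between two Gaussians fitted to the feature sets. The symbols below are chosen here for illustration: $(\mu_r, \Sigma_r)$ and $(\mu_g, \Sigma_g)$ denote the mean and covariance of the real and generated features, respectively.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
$$

The distance is zero when the two feature distributions have identical means and covariances, which is why lower values are read as better.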

A Glimpse into Results

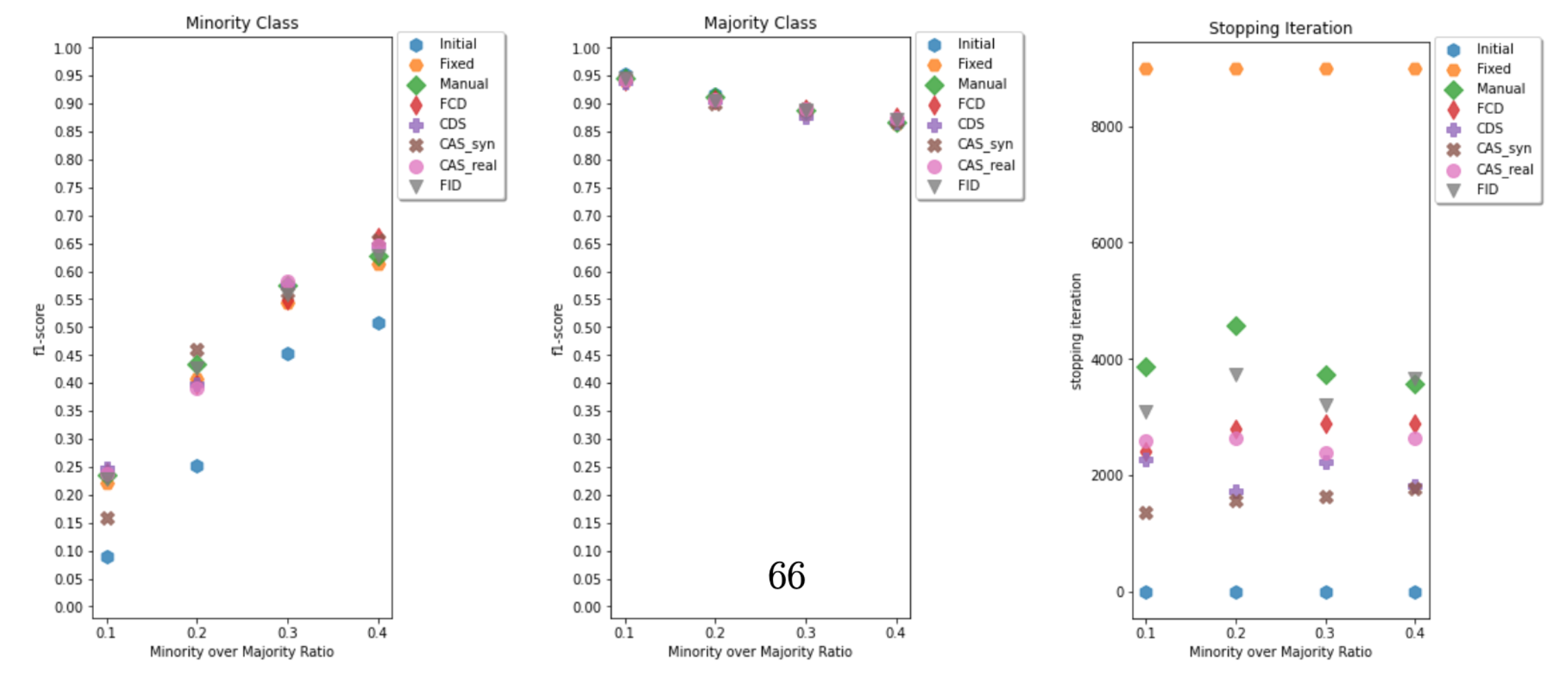

The figure above compares a Conditional GAN trained via different methods and used to oversample an imbalanced, binarized dataset built from Fashion-MNIST classes 2 and 4.

All the points, except for the ones labeled ‘initial’ and ‘Manual’, represent different runs of AutoGAN using various oracle instances. Both the ‘Manual’ method and the AutoGAN method show similar improvements on the minority class after oversampling. This suggests that an automated system operating without human intervention can achieve results comparable to those of a manually inspected GAN.

The Potentials of the Solution

Because AutoGAN is not tied to image data, it opens up interesting avenues for future research, such as transferring image-to-image translation techniques to tabular-to-tabular translation or applying image denoising methods to tabular data.

The Published Results

For a full description of the AutoGAN algorithm and the results from a wide range of experiments, please see our research paper:

AutoGAN: An Automated Human-out-of-the-Loop Approach for Training Generative Adversarial Networks

For the complete paper details and results, please visit my GitHub repository or check my publication list.